February 27, 2007 5:05 PM

Preventing Employee Issues

Today, I've been sitting all day (except for class) in a seminar put on by my university's Office of Compliance and Equity Management, called "Preventing Employee Issues". The presenter is a lawyer who defends organizations against lawsuits from employees on grounds of discrimination, harassment, unlawful termination, medical difficulties, and so on. The idea is that we in attendance might learn how to do our jobs more effectively, both from the perspective of our employer and from the perspective of our employees. The thought of faculty in my department as my "employees" makes me chuckle almost as much as it would make them, but the legal effect is the same.

This is the sort of event that I would never have been asked to attend as a faculty member. And so this is the sort of post I would never have written before, and the sort that my friend Joe could probably do without! While I'd rather have been writing code, I have found the seminar quite interesting. I've always enjoyed listening to lawyers talk about the law, and this would have been a bit of fun even if it didn't apply more directly to my current work duties.

I'm surprised at just how much of the advice we've received is simply good practice for how any person should live and treat others. It's almost a re-telling of Fulghum's dicta:

- Communicate clearly. Tell people what you expect. Give specific, concrete feedback.

- Be consistent. Treat everyone the same way. Apply rules the same way across similar situations.

- Don't make promises you can't keep.

- Do your homework. Do due diligence when you hire, evaluate, and terminate employees.

- Treat people with respect.

- Be accountable. Take responsibility for your actions. Admit mistakes and correct them.

- Don't retaliate.

- Follow the rules. Set a good example. Model expected behavior.

These seem like good behaviors for most any part of one's life. They remain important when you are a manager, whether at a university or in a software house. The lawyer's essential point is that they become even more important for supervisors and organizations, because they create environments in which employees can work reasonably and thus in which employers have met their legal obligations to employees.

One stream of advice running throughout the presentation is: Document. Document everything. Document, document, document. Documentation creates the historical record that supports the actions a manager has taken, should those actions ever be called into question. Indeed, even if you prefer not to follow the "good behavior" guidelines above for moral or ethical reasons, you might decide to do so cynically because they create a living documentation that you try to maintain a reasonable working environment. This is one situation where the law, both statute and case law, creates a "market" in which good behavior has value beyond a person's own belief system.

The biggest thing I learned in this regard is the importance of documentation even when you don't think you need it. For example, when I became a department head, I became supervisor to the department secretary, whom I consider to be meeting the expectations of her position. My university recommends but does not require that I do an annual performance evaluation. The secretary and I discussed it, and we figured that there wasn't much of a need to go through all of the paperwork that an annual performance evaluation entails. But now I realize that I do need to do the evaluation annually -- in case I ever need to in the future. Suppose that our current secretary leaves and we hire a new one. The new person exhibits a pattern of performance problems, so I decide to do the annual performance evaluation to help the secretary improve and to begin to document the problems. The very fact that I did not evaluate the previous secretary could come back to haunt me, because the new employee could allege that I was treating him or her unfairly just by doing an evaluation! This could be spun as a form of harassment. This may seem like a stretch, but we know how the law can work sometimes, especially when a jury comes into play. The safest thing for me to do is "do the right thing" and evaluate my current secretary, to set the right example and to create a track record of doing so.

I'm trying to think of a way in which I can relate this to a principle of software design or programming; it seems like the sort of idea that bridges many kinds of activity. Maybe this is similar to writing tests for code that you "know can't break", because one of these days it might, or following the practices of a methodology such as XP even when they seem like overkill. These have the sense of "do it anyway" but perhaps not the same sense of "historical record". Oh, well.

Another stream is that there are a lot of laws that matter. FERPA, OSHA, and ERISA are just the beginning. I'm glad that my university should and does provide support to us front-line managers. One benefit of this sort of seminar is learning what kinds of situations trigger issues that need to be addressed.

Perhaps the most immediate result of attending the seminar is to have a small sense of unease about my recent roles on a couple of search committees. Have we done our due diligence? Has the university done what the committee didn't? Yikes. I'd better be sure that we know what we need to know about our candidates.

February 26, 2007 6:00 PM

Forming a New Old Habit

I've been frustrated in the last week by my inability to run.

After finally recovering from my downtime, I worked in a week of 3-milers, and then flew to Arizona for ChiliPLoP. In Carefree, I was able to run twice, extending my time on the road in an effort to rebuild stamina. While I wasn't quite ready to circle Black's Mountain yet, I was able to enjoy the beautiful terrain of the February desert. The hills made it a bit difficult for me to estimate distance from my times, but I did manage to run for 43 and 41 minutes, respectively.

Then came the frustration. After the second of my Carefree runs, I lost a few days to the last day of the conference and the travel home. I'm willing to run under crazy circumstances, but driving home until 3 AM makes it tough to run at 5 AM... Then I caught up on work and sleep for a couple of days. Just as I was ready to run, we were hit by some inclement weather. I've gone on record for running in the cold, but there is one kind of weather that gives me pause: ice. Cold can be addressed with layers -- more and better layers of clothing. But a two-inch layer of ice, glassy and transparent, is a whole different matter. Even the most dedicated runners have to adjust.

But I am ready to run again, so it will happen. This morning, I circumvented the ice by running indoors. Sticking with my plan for a slow and steady return, I ran only four miles and did my best to avoid the speed-up that comes almost naturally when I am running short laps in the presence of others.

Establishing new habits, even ones that used to be old habits, takes time. Running offers a physical reminder of this, via sore muscles and fatigue. But with time, the habit returns to match the desire to run.

February 23, 2007 7:58 PM

**p++^=q++=*r---s

I thought about calling this piece "The Case Against C" but figured that this legal expression in the language makes a reasonably good case on its own. Besides, that is the name of an old paper by P.J. Moylan from which I grabbed the expression. (PDF | HTML, minus footers with quotes) When I first ran across a reference to this paper, I thought it might make a good post for my attempt at the Week of Science, but by the author's own admission this is more diatribe than science.

C is a medium-level language combining

the power of assembly language with

the readability of assembly language.

I finally finished reading the paper on my way home from ChiliPLoP, and it presented a well-reasoned argument that computer scientists and programmers consider using languages that incorporate advances in programming languages since the creation of C. He doesn't diss C, and even professes an admiration for it; rather he speaks to specific features about which we know much more now than we did in the early 1970s. I ended up collecting a few good quotes, like the one above, and a few good facts, trivia, and guidelines. I'll share some of the more notable with you.

Facts, Trivia, and Guidelines

- One of the features of C that is behind the times is its weak support for modular code. C supports separate compilation of modules, but Moylan reminds us that modularity is really about information hiding and abstraction. In this regard, C's system is lacking. Moylan gives a very nice description of ten practices that one can adopt in order to build effective modular programs in C, ranging from technical advice such as "Exactly one header file per module.", "Every module must import its own header file, as a consistency check.", and "The compiler warning 'function call without prototype' should be enabled, and any warning should be treated as an error." to team practices such as "Ideally, programmers working in a team should not have access to one another's source files. They should share only object modules and header files." He is not optimistic about the consistent use of these rules, though:

Now, the obvious difficulty with these rules is that few people will stick to them, because the compiler does not enforce them. ... And, what is worse, it takes only one programmer in a team to break the modularity of a project, and to force the rest of the team to waste time with grep and with mysterious errors caused by unexpected side-effects.

- Moylan gives a simple example that shows as concisely as possible how C's #include directive can lead to a program that is in an inconsistent state because some modules which should have been re-compiled were not. The remedy of always recompiling everything is obviously unattractive to anyone working on a large system.

- Conventional wisdom says that C compilers produce faster code than compilers for other languages. Moylan objects on several grounds, including the lack of any substantial recent evidence for the conventional wisdom. He closes with my favorite piece of trivia from the paper:

It is true that C compilers produced better code, in many cases, than the Fortran compilers of the early 1970s. This was because of the very close relationship between the C language and PDP-11 assembly language. (Constructs like *p++ in C have the same justification as the three-way IF of Fortran II: they exploit a special feature of the instruction set architecture of one particular processor.) If your processor is not a PDP-11, this advantage is lost.

I learned my assembly language and JCL on an IBM mainframe and so never had the pleasure of writing assembly for a PDP-11. (I did learn Lisp on a PDP-8, though...) Now I want to go learn about the PDP-11's assembly language so that I can use this example at greater depth in my compilers course next semester.
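For readers who have not met the idiom named in that quotation, here is a minimal sketch of `*p++` at work. The function names `copy_string` and `sum_ints` are made up for illustration and do not come from Moylan's paper. The only point is that each expression reads or writes through a pointer and then advances it in a single step, which is the pairing the quotation ties to the PDP-11's instruction set.

```c
#include <assert.h>
#include <stddef.h>

/* Hypothetical helper: copy a NUL-terminated string with the *p++ idiom.
   The caller must make sure dst has room for the whole string. */
char *copy_string(char *dst, const char *src)
{
    assert(dst != NULL && src != NULL);
    char *start = dst;

    /* Each pass stores one character, then advances both pointers.
       The loop ends right after the terminating '\0' has been copied. */
    while ((*dst++ = *src++) != '\0') {
        /* empty body: all of the work happens in the condition */
    }
    return start;
}

/* Hypothetical helper: sum n ints by walking a pointer instead of indexing. */
long sum_ints(const int *p, size_t n)
{
    long total = 0;
    while (n-- > 0) {
        total += *p++;   /* read the current element, then step to the next */
    }
    return total;
}
```

Whether a given compiler turns these loops into anything special depends on the target machine, which is the substance of Moylan's objection.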

Favorite Quotes

You've already seen one above. My other favorite is:

Much of the power of C comes from having a powerful preprocessor. The preprocessor is called a programmer.

There were other good ones, but they lack the technical cleanness of the best because they could well be said of other languages. Examples from this category include "By analysis of usenet source, the hardest part of C to use is the comment." and "Real programmers can write C in any language." (My younger readers may not know what Moylan means by "usenet", which makes me feel old. But they can learn more about it here.)

----

As readers here probably know from earlier posts such as this one, I'm as tempted by a guilty language pleasure as anyone, so I enjoyed Moylan's article. But even if we discount the paper's value for its unabashed advocacy on language matters, we can also learn from his motivation:

I am not so naive as to expect that diatribes such as this will cause the language to die out. Loyalty to a language is very largely an emotional issue which is not subject to rational debate. I would hope, however, that I can convince at least some people to re-think their positions.

I recognise, too, that factors other than the inherent quality of a language can be important. Compiler availability is one such factor. Re-use of existing software is another; it can dictate the continued use of a language even when it is clearly not the best choice on other grounds. (Indeed, I continue to use the language myself for some projects, mainly for this reason.) What we need to guard against, however, is making inappropriate choices through simple inertia.

Just to keep in mind that we have a choice of language each time we start a new project is a worthwhile lesson to learn.

February 21, 2007 5:50 PM

ChiliPLoP 2007 Redux

Our working group had its most productive ChiliPLoP in recent memory this year. The work we did isn't ready for public consumption yet, so I can't post a link just yet, but I am hopeful that we will be able to share our results with interested educators soon enough. For now, a summary.

This year, we made substantial progress toward producing a well-documented resource for instructors who want to teach Java and OOP. As our working paper begins:

The following set of exercises builds up a simple application over a number of iterations. The purpose is to demonstrate, in a small program, most of the key features of object-oriented programming in Java within a period of two to three weeks. The course can then delve more deeply into each of the topics introduced, in whatever order the instructor deems appropriate.

An instructor can use this example to lay a thin but complete foundation in object-oriented Java for an intro course within the first few weeks of the semester. By introducing many different ideas in a simple way early, the later elements of the course can be ordered at the instructor's discretion. So many other approaches to teaching CS 1 create strict dependencies between topics and language constructs, which limits the instructor's approach over the course of the whole semester. The result is that most instructors won't adopt a new approach, because they either cannot or do not want to be tied down for the whole semester. We hope that our example enables instructors to do OO early while freeing them to build the rest of their course in a way that fits their style, their strengths and interests, and their institution's curriculum.

Our longer-term goal is that this resource serve as a good example for ourselves and for others who would like to document teaching modules and share them with others. By reducing external dependencies to a minimum, such modules should assist instructors in assembling courses that use good exercises, code, and OO programming practice.

... but where are the patterns? Isn't a PLoP conference about patterns? Yes, indeed, and that is one reason that I'm more excited about the work we did this week than I have been in a while. By starting with a very simple little exercise, growing progressively into an interesting simulation via short, simple steps, we have assembled both a paradigmatic OO CS 1 program and the sequence of changes necessary to grow it. To me, this is an essential step in identifying the pattern language that generates the program. I may be a bit premature, but I feel as if we are very close to having documented a pattern sequence in the Alexandrian sense. Such a pattern sequence is an essential part of a pattern-oriented approach to design, and one that only a few people -- Neil Harrison and Jim Coplien -- have written much about. And, like Alexander's idea of pattern diagnosis, pattern sequences will, I think, play a valuable role in how we teach pattern-directed design. My self-assigned task is to explore this extension of our ChiliPLoP work while the group works on filling in some details and completing our public presentation.

One interesting socio-technical experiment we ran this week was to write collaboratively using a Google doc. I'm still not much of a fan of browser-based apps, especially word processors, but this worked out reasonably well for us. It was fast, performed autosaves in small increments, and did a great job handling the few edit conflicts we caused in two-plus days. We'll probably continue to work in this doc for a few more weeks, before we consider migrating the document to a web page that we can edit and style directly.

Two weeks from today, the whole crew of us will be off to SIGCSE 2007, which is an unusual opportunity for us to follow up our ChiliPLoP and hold ourselves accountable for not losing momentum. Of course, two weeks back home like this would certainly wipe my mind clear of any personal momentum I have built up, so I will need to be on guard!

Posted by Eugene Wallingford | Permalink | Categories: Patterns, Software Development, Teaching and Learning

February 20, 2007 11:58 PM

A State Conformable to Nature

In the beginning, I blogged on the danger of false pride and quoted the Stoic philosopher Epictetus. Now I have encountered another passage from Epictetus, at the mind hacker site Hacks:

When you are going about any action, remind yourself what nature the action is. If you are going to bathe, picture to yourself the things which usually happen in the bath: some people splash the water, some push, some use abusive language, and others steal. Thus you will more safely go about this action if you say to yourself, "I will now go bathe, and keep my own mind in a state conformable to nature." And in the same manner with regard to every other action. For thus, if any hindrance arises in bathing, you will have it ready to say, "It was not only to bathe that I desired, but to keep my mind in a state conformable to nature; and I will not keep it if I am bothered at things that happen."

The notion of a "state conformable to nature" was central to his injunction against false pride, and here it remains the thoughtful person's goal, this time in the face of all that can go wrong in the course of living. This quote also resonates with me, because, just as I am inclined toward a false pride, I have a predisposition toward letting small yet expectable hindrances interfere with my frame of mind. Perhaps predisposition is the wrong word; perhaps it's just a bad habit.

As is often the case for me, a second or third positive reference is all I need to commit to reading more. In the coming weeks, I now plan to read the Discourses of Epictetus. We even have a copy on our bookshelf at home. (My wife's more classical education proves useful to me again!)

February 19, 2007 11:39 PM

How to Really Succeed in Research...

... when you work at a primarily undergraduate school.

My Dean sent me a link to Guerrilla Puzzling: a Model for Research (subscription required), a Chronicle Observer piece by Marc Zimmer. The article describes nature as "full of intriguing puzzles for researchers to solve". Unlike the puzzles we buy at the store, the picture isn't known ahead of time, and the shape and number of pieces aren't even known. Scientists have "to find the pieces before trying to place them in the puzzle."

From this analogy, Zimmer argues that researchers at schools devoted to undergraduates can make essential and valuable contributions to science despite lacking the time, manpower, and financial resources available to scientists at our great research institutions. While some at the elite liberal arts colleges do so by mimicking the big-school model on a smaller scale, most do so by complementing -- not competing with -- the efforts of major research programs.

The biggest disadvantage of doing research at an undergraduate institution is the different time scale. An undergraduate is in school for only four years or so, and the typical undergrad is capable of contributing to a project for much less time, perhaps a year or two. In computer science, where even empirical science often requires writing code, the window of opportunity can be especially small. My hardest adjustment in going from graduate school researcher to faculty researcher was the speed with which students, even master's students, moved from entering the lab to collecting their sheepskin. Just as we felt comfortable and productive together, the student was gone.

Zimmer points out one positive in this pace: the implicit license to take bigger chances. When one's grad students depend on successful projects to land and hold their future jobs, an advisor feels some moral duty to select projects with reasonable chances for success. An undergrad, on the other hand, benefits just from participating in research. Thus the advisor can take a chance on a project with a higher reward/risk profile.

How is the researcher at the primarily undergraduate institution to succeed? Via what he calls "guerrilla" puzzling:

- Start working in a new area, while the big guys are still writing the grants they need to get started, and pick off the easiest problems.

This strategy requires a pretty keen sense of one's field. But it can also be helped along by listening to deep thinkers and making connections.

One of our faculty members is collaborating on a grid computing project with a local data center, and they are attacking a particular set of questions that bigger schools and more prominent industrial concerns haven't yet been able to pin down. Sometimes, it's easier to be agile when you're small.

- Start working in an area whose solutions seem to generate new problems.

It seems to me that certain parts of the theoretical CS world work this way. My former student's work applying the "theory of networks" to Linux package relationships fits into an unfolding body of work where every new application of network ideas creates a set of questions that sustain the next round of study. Another faculty member here has built a productive career by following a trail of generative questions in medical informatics and database organization and search.

- Start working on distinctive, attractive problems.

I'm not sure I get this one. Why aren't the big guys working on these? Presumably, they can see them as well as the researcher at the undergrad school, and they have the resources they need to move to dominate the problem.

Sometimes, small-school researchers can create a niche in their problem space by attacking a well-defined, focused problem, solving it, and then moving on to another. In some ways, computer science is more amenable to this straightforward approach. Unless you work in a "big iron" area of computing like supercomputing, the lab equipment that one needs is pretty simple and not beyond the financial means of anyone. And these days one can even do very interesting projects in parallel and distributed computing using clusters of commodity processors. So a CS researcher at an undergrad institution can compete on a focused problem nearly as well as someone at a larger school. The primary advantage of the large-school program is in numbers -- an army of grad students can deforest a problem area pretty quickly, and it can be agile, too.

One thing is for certain: A scientist at a primarily undergrad school has to think consciously about how to build a research program, and mimicking one's Research I advisor isn't likely the most reliable path to success.

February 19, 2007 12:03 AM

Being Remembered

Charisma in a teacher is not a mystery or a nimbus of personality, but radiant exemplification to which the student contributes a corresponding radiant hunger to becoming.

-- William Arrowsmith

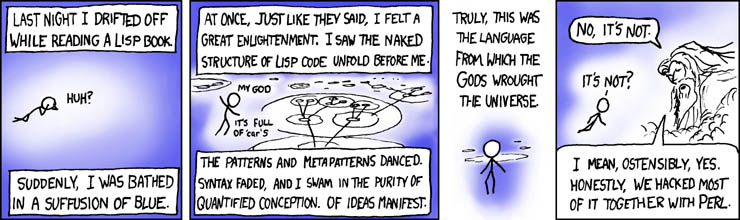

On a day when I met with potential future students and their parents, several former students of mine sent me links to this xkcd comic:

I laughed out loud twice while reading it, first at the line "My god, it's full of cars." and then at the final panel nod at a postmodern god. The 'cars' line was a common subject line in the messages I received.

As these messages rolled in, I also felt a great sense of honor. Students whom I last saw two or seven or ten years ago thought enough of their time studying here to remember me upon reading this comic and then send me an e-mail message. All studied Programming Languages and Paradigms with me, and all were affected in some lasting way by their contact with Scheme. One often hears about how a teacher's effect on the world is hard to measure, like a ripple on the water sent out into the future. I am humbled that some really neat people out in this world recall a course they took with me.

Of course, I know that a big part of this effect comes from the beauty of the ideas that I am fortunate enough to teach. Scheme and the ideas it embodies have the power to change students who approach it with a "radiant hunger to becoming". I am merely its channel. That is the great privilege of scholars and teachers, to formulate and share great ideas, and to encourage others to engage the ideas and make them grow.

February 18, 2007 4:31 PM

One Problem I Need Not Worry About

Indeed, for those of you with a strong, charismatic personality, it is worthwhile to consider the idea that charisma can be as much a liability as an asset. Your strength of personality can sow the seeds of problems, when people filter the brutal facts from you. You can overcome the liabilities of having charisma, but it does require conscious attention.

-- James Collins, Good to Great, page 73

I may have to worry about folks not telling me the brutal facts, but it won't be due to the force of my charisma.

Good to Great offers folks like me other assurances as well. Helping a group to transform itself from good to great is usually more a matter of working with the right people, listening to the facts, and discipline than of charisma or vision.

February 18, 2007 4:25 PM

Respite in Hard Work, Done Somewhere Else

As I prepared to leave for ChiliPLoP 2007, I looked to the trip as almost a vacation. While I will be working steadily through the 76 hours or so in Arizona, both on elementary patterns and various and sundry school duties, I will be off the treadmill that has been the last few days. I've hardly had time to breathe since Wednesday morning:

Wednesday: Prepare for and sit in on meetings all day, including a faculty meeting. Scramble to make last-minute changes to our fall semester schedule before the secretary goes on vacation. Exercise for an hour with my older daughter in her ballet class. I was in the class, and did all of the "floor barre" work that the rest of the class did. (At least that was fun time with Sarah!) For an old guy, I did all right. Sprint home to pack a quick overnight bag. Drive two hours to Des Moines. Crash in motel bed.

Thursday: Attend 7:00 AM breakfast at the State Capitol, sponsored by the Department of Economic Development to encourage state legislators to fully fund the department's budget request for 2007-2008. Mingle with legislators, and visit with IT bigwigs from most of the major players in the state. After two hours, drive two hours back home. Prepare for class. Meet my Programming Languages class. Attend meeting. Give dinner talk to local Kiwanis club on my department's efforts to participate in state economic development through curriculum and research projects that partner with regional companies. Crash in my own bed.

Friday: Arrive at office by 6:30 AM to do some leftover work. Coach team of local eighth graders who are preparing for a math competition. After several months, the competition is here -- tomorrow. Spend four hours visiting with prospective students and their parents. Scramble all afternoon to tie up loose ends in the office. Take one daughter to orchestra practice and then to play rehearsal. Pack for ChiliPLoP.

Saturday: Take eighth graders to math competition at 8:00 AM. Fill time and watch until 1:30. Take one daughter to play rehearsal. Kiss other daughter, already at rehearsal, good-bye for a few days. Head home, kiss wife good-bye, load bags in car, and hit road for 3+ hour drive to Minneapolis. (Take short nap along the way.) Grab dinner with friend. Crash on friend's couch.

Sunday: Rise at 5 AM for ride to airport. Encounter usual delays. Board plane at 6:30 AM for 7:00 AM flight. Then ... sit for two hours before take-off as plane requires computer system maintenance. I don't usually think of a two-hour in-plane delay as a respite, but this one was. I took a nap!

We are now in the air, approaching Phoenix. ChiliPLoP, as busy as it always is, made busier by some necessary work from back home, will seem a break.

This sort of week may be no big deal to my consultant friends, or maybe even to my academic friends with big outreach portfolios. But it's new to me. While each activity of the week offered value, I found myself looking forward to the TSA and the plane. Extra time on the plane didn't bother me.

Landing will be better. Work on computer science with some of my favorite colleagues will be better.

A run in the sun will be better.

February 16, 2007 8:53 PM

A Partial Verdict

I think the verdict is in on the nature of my students this semester: The class is a mixed bag. Many students are quietly engaged. The rest are openly skeptical. It's not a high-positive energy crowd that can drive a semester forward, but I think there is a lot of hope for where many of these students can end up. The rest will likely prefer merely to survive to the end of the course and then move on to what really interests them. I hope that all can enjoy at least part of the ride.

February 16, 2007 8:45 PM

Suit Up!

This just in from my favorite new TV character in recent years, Barney Stinson of How I Met Your Mother:

Here's how you run a marathon:

Step 1: Start running.

<pause>

Oh, yeah -- there's no Step 2!

After five weeks of almost no running, I started running this week. Unlike Barney, though, I stopped after just three miles Monday morning. But then I did it again and again on Tuesday and Wednesday. Then, after a day on the road playing University Lobbyist, I started again on Friday, this time for 3.5 miles. It feels good to be well enough to run any day I can. Indeed, it feels good just to run. My legs feel fine even after the layoff, probably because I've remained patient on distance and speed.

Unlike my friend Barney, who ran and finished the New York Marathon without training, only to find several hours later that he no longer had control of his legs. This is one of the few experiences that I share with Barney. Even on television, the truth can catch up with you.

Suit up! Start running.

February 12, 2007 8:15 PM

3 Out Of 5 Ain't Bad

... with apologies to Meat Loaf.

Last week, I accepted the Week of Science Challenge. How did I do? On sheer numbers, not so well. I did manage to blog on CS topics on three of the five business days. That's not a full week of posts, but it is more than I have written recently, especially straight CS content.

On content, I'm also not sure I did so well. The first post, on programming patterns across paradigms, is a good introduction to an issue of longstanding interest to me, and it caused me to think about Felleisen's important paper. The second post, on the beautiful unity of program and data, was not what I had hoped for it. I think I overreached, trying to bring too many different ideas into a single short piece. It either needs to be a lot longer, or it needs a sharper focus. At least I can say that I learned something while writing the essay, so it was valuable to me. But it needs another draft, or three, before it's in a final state. Finally, my last piece of the week turned out okay for a blog entry. Computer Science -- at least a big part of the varied discipline that falls under this umbrella -- is science. We in the field need to do a better job helping people to know this.

Accepting the challenge served me well by forcing me to write. That sort of constraint can be good. Because I had to write something, even if not borne away by a flash of inspiration, it forced me to write about something that required extra effort at that moment, and to write quickly enough to get 'er done that day. These constraints, too, can boost creativity, and help build the habit of writing where it has fallen soft in the face of too many other claims on time. In some ways, writing those essays felt like writing essay exams in college!

I think that I would probably have wanted to write about all of these ideas at some point later, but without the outside drive to write now I would probably have deferred them until a better time, until I was "ready". But would I ever? It's easy for me to wait so long that the idea is no longer fresh enough for me to write. An interesting Writing Down The Bones-like exercise might be for me to grab an old ideas file and use a random-number generator to pick out one topic every day for a week or so -- and then just write it.

As for the pieces produced this week, I can imagine writing more complete versions of the last two some day, when time, an inspiration, or a need hits me.

As I forced myself to look for ideas every day, I noticed my senses were heightened. For example, one evening last week I listened to an Opening Move podcast with Scott Rosenberg, author of Dreaming in Code. This book is about the many-years project of Mitch Kapor to build the ultimate PIM, Chandler. During the interview, Rosenberg comments that software is "thought stuff", not subject to the laws of physics. As we implement new ideas, users -- including ourselves -- keep asking for more. My first thought was, I should update my piece on CS as science. CS helps to ask and to answer fundamental questions about what we could reasonably ask for, how much is too much, and what we will have to give up to get certain features or functionality. What are the limits to what we can ask for? Algorithms, theory, and experiment all play a role.

Maybe in my next draft.

February 12, 2007 7:27 PM

A New Start

I had a new experience running this morning. I ran! That seemed novel, given my inability to run the last few weeks. Due to a persistent something that kept me at 50-90% strength since January 5, I hadn't run in 2 weeks and a weekend; I had managed to run once in the week before that, and twice in each of the two weeks before that, for a total of five runs in five weeks. An expected result of this off-time was a loss of fitness. An unexpected result was a certain loss of memory. Dressing and preparing for the run felt odd. I'm a creature of habit, and two weeks is a good start on new habits.

Nothing could have made my first run in so long more enjoyable than one of the great pleasures of an early-morning runner. Last evening, we received a little under an inch of wet snow, so this morning my first steps broke fresh snow. The crunch under my feet made the run seem new in more than one way.

True to my supposition from a couple of weeks ago, I plan to take my restart slow and steady. So today I ran a slow and easy three miles. My three-mile routes have not been put to much use in the last year or so, so the route itself seemed new, too. I didn't worry about speed. Instead I merely focused on the feeling in my lungs as they worked for the first time in a couple of weeks, on the feeling in my legs as they pushed me forward, in my hips as they adjusted to the slightly uncertain steps on wet snow. It was good.

February 08, 2007 6:23 PM

Computer Science as Science

If you're in computer science, you've heard the derision from others on campus. "Any discipline with 'science' in the name isn't." Or maybe you've heard, "What we really need on campus is a department of toaster oven science." These comments reflect a deep misunderstanding of what computing is, or what computer scientists do. We haven't always done a very good job telling our story. And a big part of what happens in the name of CS really isn't science -- it's engineering, or (sadly) technical training. But does that mean that no part of computer science is 'science'?

Not at all. Computer science is grounded in a deep sense of empiricism, where the scientific method plays an essential role in the process of doing computer science. It's just that the entities or systems that we study don't always spring from the "natural world" without man's intervention. But they are complex systems that we don't understand thoroughly yet -- systems created by technological processes and by social processes.

I mentioned an example of this sort of research from my own backyard back in December 2004. A master's student of mine, Nate Labelle, studied relationships among open-source software packages. He was interested in seeing to what extent the network of 'imports' relationships bore any resemblance to the social and physical networks that had been studied deeply by folks such as Barabasi and Watts. Nate conducted empirical research: he mapped the network of relationships in several different flavors of Linux as well as a few smaller software packages, and then determined the mathematical properties of the networks. He presented this work in a few places, including a paper at the 6th International Conference on Complex Systems. He concluded that:

... despite diversity in development groups and computer system architecture, resource coupling at the inter-package level creates small-world and scale-free networks with a giant component containing recurring motifs; which makes package networks similar to other natural and engineered systems.

This scientific result has implications for how we structure package repositories, how we increase software robustness and security, and perhaps how we guide the software engineering process.

Studying such large systems is of great value. Humans have begun to build remarkably complex systems that we currently understand only surface deep, if at all. That is often a surprise to non-computer scientists and non-technology people: The ability to build a (useful) system does not imply that we understand the system. Indeed, it is relatively easy for me to build systems whose gross-level behavior I don't yet understand well (using a neural network or genetic algorithm) or to build systems that perform a task so much better than I that it seems to operate on a completely different level than I do. A lot of chess and other game-playing programs can easily beat the people who wrote them!

We can also apply this scientific method to study processes that occur naturally in the world using a computer. Computational science is, at its base, a blend of computer science and another scientific domain. The best computational scientists are able to "think computationally" in a way that qualitatively changes their domain science.

As Bertrand Russell wrote a century ago, science is about description, not logic or causation or any other naive notion we have about necessity. Scientists describe things. This being the case, computer science is in many ways the ultimate scientific endeavor -- or at least a foundational one -- because computer science is the science of description. In computing we learn how to describe thing and process better, more accurately and more usefully. Some of our findings have been surprising, like the unity of data and program. We've learned that process descriptions whose behavior we can observe will teach us more than static descriptions of the same processes left to the limited interpretative powers of the human mind. The study of how to write descriptions -- programs -- has taught us more about language and expressiveness and complexity than our previous mathematics could ever have taught us. And we've only begun to scratch the surface.

For those of you who are still skeptical, I can recommend a couple of books. Actually, I recommend them to any computer scientist who would like to reach a deeper understanding of this idea. The first is Herb Simon's seminal book The Sciences of the Artificial, which explains why the term "science of the artificial" isn't an oxymoron -- and why thinking it is is a misconception about how science works. The second is Paul Cohen's methodological text Empirical Methods for Artificial Intelligence, which teaches computer scientists -- especially AI students -- how to do empirical research in computer science. Along the way, he demonstrates the use of these techniques on real CS problems. I seem to recall examples from machine learning, which is particularly empirical in its approach.

February 06, 2007 10:31 PM

Basic Concepts: The Unity of Data and Program

I remember vividly a particular moment of understanding that I experienced in graduate school. As I mentioned last time, I was studying knowledge-based systems, and one of the classic papers we read was William Clancey's Heuristic Classification. This paper described an abstract decomposition for classification programs, the basis of diagnostic systems, that was what we today would call a pattern. It gave us the prototype against which we could pattern our own analysis of problem-solving types.

In this paper, Clancey discussed how configuration and planning are two points of view on the same problem, design. A configuration system produces as output an artifact capable of producing state changes in some context; a planning system takes such an artifact as input. A configuration takes as input a sequence of desired state changes, to be produced by the configured system; a planning system produces a sequence of operations that produces desired state changes in the given artifact. Thus, the same kind of system could produce a thing, an artifact, or a process that creates an artifact. In a certain sense, things and processes were the same kind of entity.

Wow. Design and planning systems could be characterized by similar software patterns. I felt like I'd been shown a new world.

Later I learned that this interplay between thing and process ran much deeper. Consider this Lisp (or Scheme) "thing", a data value known as a list:

(a 1 2)

If I replace the symbol "a" with the symbol "+", I also have a Lisp list of size 3:

(+ 1 2)

But this Lisp list is also the Lisp program for computing the sum of 1 and 2! If I give this program to a Lisp interpreter, I will see the result:

> (+ 1 2)

3

In Lisp, there is no distinction between data and program. Indeed, this is true for C, Java, or any other programming language. But the syntax of Lisp (and especially Scheme) is so simple and uniform that the unity of data and program stands out starkly. It also makes Scheme a natural language to use in a course on the principles of programming languages. The syntax and semantics of Lisp programs are so uniform that one can write a Lisp interpreter in about a page of Lisp code. (If you'd like, take a look at my implementation of John McCarthy's Lisp-in-Lisp, in Scheme, based on Paul Graham's essay The Roots of Lisp. If you haven't read that paper, please do soon.)
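The same point can be sketched outside Lisp. Below is a minimal illustration in Python, not McCarthy's Lisp-in-Lisp and not the implementation linked above: nested Python lists stand in for Lisp lists, and a hypothetical `evaluate` function with a small, made-up `PRIMITIVES` table treats such a list as a program. It handles only numbers and a few arithmetic operators, which is far less than a real interpreter does.

```python
import operator

# Hypothetical table of primitive procedures, assumed for this sketch only.
PRIMITIVES = {"+": operator.add, "-": operator.sub, "*": operator.mul}

def evaluate(expr):
    """Evaluate a prefix-notation expression held as nested Python lists."""
    if isinstance(expr, (int, float)):
        return expr                      # a number evaluates to itself
    op, *args = expr                     # first item names the operation
    values = [evaluate(arg) for arg in args]
    result = values[0]
    for value in values[1:]:             # apply the operator left to right
        result = PRIMITIVES[op](result, value)
    return result

data = ["a", 1, 2]     # a list of three items, nothing more
program = ["+", 1, 2]  # also a list of three items
data[0] = "+"          # swap the symbol, as in the text above
```

After the swap, `evaluate(data)` and `evaluate(program)` compute the same sum, because the evaluator never asks whether a list was meant as data or as code.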

There is no distinction between data and program. This is one of the truly beautiful ideas in computer science. It runs through everything that we do, from von Neumann's stored-program computer itself to the implementation of a virtual machine for Java to run inside a web browser.

A related idea is the notion that programs can exist at arbitrary levels of abstraction. For each level at which a program is data to another program, there is yet another program whose behavior is to produce that data.

- An assembler produces machine language from assembly language.

- A compiler produces assembly language from a high-level program.

- The program-processing programs themselves can be produced. From a language grammar, lex and yacc produce components of the compiler.

- One computer can pretend to be another. Windows can emulate DOS programs. Mac OS X can run old-style OS 9 programs in its Classic mode. Intel-based Macs can run programs compiled for a PowerPC-based Mac in its Rosetta emulation mode.
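A toy version of these layers, sketched in Python under stated assumptions: `compile_expr` plays the role of a compiler, turning a nested expression into instructions for an invented stack machine, and `run` plays the role of the machine. The instruction names `PUSH`, `ADD`, and `MUL` and both function names are hypothetical, chosen for this illustration rather than taken from any real toolchain.

```python
# Hypothetical mapping from source operators to stack-machine opcodes.
OPCODES = {"+": "ADD", "*": "MUL"}

def compile_expr(expr):
    """Translate a nested prefix expression into a flat instruction list."""
    if isinstance(expr, int):
        return [("PUSH", expr)]
    op, left, right = expr
    # Code for both operands first, then the instruction that combines them.
    return compile_expr(left) + compile_expr(right) + [(OPCODES[op],)]

def run(code):
    """Interpret the instruction list on a simple stack machine."""
    stack = []
    for instruction in code:
        if instruction[0] == "PUSH":
            stack.append(instruction[1])
        else:
            right = stack.pop()
            left = stack.pop()
            stack.append(left + right if instruction[0] == "ADD" else left * right)
    return stack.pop()
```

The output of `compile_expr` is plain data (a list of tuples) until it is handed to `run`, at which point it behaves as a program for the "machine" that `run` pretends to be.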

One of the lessons of computer science is that "machine" is an abstract idea. Everything can be interpreted by someone -- or something -- else.

I don't know enough of the history of mathematics or natural philosophy to say to what extent these ideas are computer science's contributions to our body of knowledge. On the one hand, I'm sure that deep thinkers throughout history at least had reason and resource to make some of the connections between thing and process, between design and planning. On the other, I imagine that before we had digital computers at our disposal, we probably didn't have sufficient vocabulary or the circumstances needed to explore issues of natural language to the level of program versus data, or of languages being processed from abstract to concrete, down to the details of a particular piece of hardware. Church. Turing. Chomsky. McCarthy. These are the men who discovered the fundamental truths of language, data, and program, and who laid the foundations of our discipline.

At first, I wondered why I hadn't learned this small set of ideas as an undergraduate student. In retrospect, I'm not surprised. My alma mater's CS program was aimed at applications programming, taught a standard survey-style programming languages course, and didn't offer a compilers course. Whatever our students here learn about the practical skills of building software, I hope that they also have the chance to learn about some of the beautiful ideas that make computer science an essential discipline in the science of the 21st century.

February 05, 2007 8:46 PM

Programming Patterns and "The Conciseness Conjecture"

For most of my research career, I have been studying patterns in software. In the beginning I didn't think of it in these terms. I was a graduate student in AI doing work in knowledge-based systems, and our lab worked on so-called generic tasks, little molecules of task-specific design that composed into systems with expert behavior. My first uses of the term "pattern" were home-grown, motivated by an interest in helping novice programmers recognize and implement the basic structures that made up their Pascal, Basic, and C programs. In the mid-1990s I came into contact with the work of Christopher Alexander and the software patterns community, and I began to write up patterns of both sorts, including Sponsor-Selector, loops, Structured Matcher, and even elementary patterns of Scheme programming for recursion. My interest turned to the patterns at the interface between different programming styles, such as object-oriented and functional programming. It seemed to me that many object-oriented design patterns implemented constructs that were available more immediately in functional languages, and I wondered whether it were true that patterns in any one style would reflect the linguistic shortcomings of the style, implementing ideas available directly in another style.

My work in this area has always been more phenomenological than mathematical, despite occasional short side excursions into promising mathematics like group and category theory. I recall a 6- to 9-month period five or six years ago when a graduate student and I looked at group theory and symmetries as a possible theoretical foundation for characterizing relationships among patterns. I think that this work still holds promise, but I have not had a chance to take it any further.

Only recently did I finally read Matthias Felleisen's 1991 paper On the Expressive Power of Programming Languages. I should have read it sooner! This paper develops a framework for comparing languages on the basis of their expressive power and then applies it to many of the issues relevant to language research of the day. One section in particular speaks to my interest in programming patterns and shows how Felleisen's framework can help us to discern the relative merits of more expressive languages and the patterns that they embody. This section is called "The Conciseness Conjecture". Here is the conjecture itself:

Programs in more expressive programming languages that use the additional features in a sensible manner contain fewer programming patterns than equivalent programs in less expressive languages.

Felleisen gives a couple of examples to illustrate his conjecture, including one in which Scheme with assignment statements realizes the implementation of a stateful object more concisely, more clearly, and with less convention than a purely functional subset of Scheme. This is just the sort of example that led me to wonder whether functional programming's patterns, like OOP's patterns, embodied ideas that were directly expressible in another style's languages -- a Scheme extended with a simple object-oriented facility would make implementation of Felleisen's transaction manager even clearer than the stateful lambda expression that switches on transaction types.
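To make the contrast concrete, here is a small sketch in Python. It is not Felleisen's example and does not reproduce his transaction manager; the names `make_account` and `transact_pure` and the "deposit"/"withdraw" transaction types are assumptions made up for illustration. The first version uses assignment to keep state inside a closure; the second avoids assignment, so every caller must follow the convention of passing the current balance in and carrying the new balance forward.

```python
def make_account(balance):
    """Stateful version: the closure assigns to `balance` directly."""
    def transact(kind, amount):
        nonlocal balance
        if kind == "deposit":
            balance += amount
        elif kind == "withdraw":
            balance -= amount
        return balance
    return transact

def transact_pure(balance, kind, amount):
    """Assignment-free version: returns the new balance instead of storing it."""
    if kind == "deposit":
        return balance + amount
    if kind == "withdraw":
        return balance - amount
    return balance

# With assignment, the object remembers its own state between calls.
account = make_account(100)
account("deposit", 50)
stateful_result = account("withdraw", 30)

# Without assignment, the caller threads the state through every call.
balance = 100
balance = transact_pure(balance, "deposit", 50)
pure_result = transact_pure(balance, "withdraw", 30)
```

Both versions arrive at the same balance. The difference is where the bookkeeping lives: in the language feature, or in a state-threading convention that every call site has to repeat.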

Stated as starkly as it is, I am not certain I believe the conjecture. Well, that's not quite true, because in one sense it is obviously true. A more expressive language allows us to write more concise code, and less code almost always means fewer patterns. This is true, of course, because the patterns reside in the code. I say "almost always" because there is an alternative to fewer patterns in smaller code: the same number of patterns, or more, in denser code!

If we qualify "fewer programming patterns" as "fewer lower-level programming patterns", then I most certainly believe Felleisen's conjecture. I think that this paper makes an important contribution to the study of software patterns by giving us a vocabulary and mechanism for talking about languages in terms of the trade-off between expressiveness and patterns. I doubt that Felleisen intended this, because his section on the Conciseness Conjecture confirms his uneasiness with pattern-driven programming. "The most disturbing consequence," he writes, "of programming patterns is that they are an obstacle to understanding of programs for both human readers and program-processing programs." For him, an important result of his paper is to formalize "how the use of expressive languages seems to be the ability to abstract from programming patterns with simple statements and to state the purpose of a program in the concisest possible manner."

This brings me back to the notions of "concise" and "dense". I appreciate the goal of using the most abstract language possible to write programs, in order to state as unambiguously and with as little text as possible the purpose and behavior of a program. I love to show my students how, after learning the basics of recursive programming, they can implement a higher-order operation such as fold to eliminate the explicit recursion from their programs entirely. What power! All because they are using a language expressive enough to allow higher-order procedures. Once you understand the abstraction of folding, you can write much more concise code.
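A minimal sketch of that step, written in Python rather than Scheme: `sum_list` uses explicit recursion, `fold` captures the recursive shape once as a right fold, and the remaining functions are written with `fold` alone. All of the function names here are chosen for illustration and are not taken from any course materials.

```python
def sum_list(items):
    """Explicit recursion: the recursive structure is spelled out by hand."""
    if not items:
        return 0
    return items[0] + sum_list(items[1:])

def fold(combine, initial, items):
    """Right fold: combine the first item with the fold of the rest."""
    if not items:
        return initial
    return combine(items[0], fold(combine, initial, items[1:]))

def sum_with_fold(items):
    """Same result as sum_list, with no recursion written in this function."""
    return fold(lambda item, rest: item + rest, 0, items)

def length_with_fold(items):
    """Counting items reuses the same fold with a different combiner."""
    return fold(lambda _item, rest: 1 + rest, 0, items)

def double_all(items):
    """Even a map-like operation fits the same shape."""
    return fold(lambda item, rest: [item * 2] + rest, [], items)
```

The recursion now lives in one place. Each new operation states only what to do with one item and the result for the rest, which is where the gain in concision comes from.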

Where is the down side? Increasing concision ultimately leads to a trade-off on understandability. Felleisen points to the danger that dispersion poses for code readers: in the worst case, it requires a global understanding of the program to understand how the program embodies a pattern. But at the other end of the spectrum is the danger posed by concision: density. In the worst case, the code is so dense as to overwhelm the reader's sense. If density were an unadulterated good, we would all be programming in a descendant of APL! The reason "practical" programmers often cite for not embracing functional programming is the density of the abstractions one finds in the code. It is an arrogance for us to imply that those who do not embrace Scheme and Haskell are simply not bright enough to be programmers. Our first responsibility is to develop means for teaching programmers these skills better, a challenge that Felleisen and his Teach Scheme! brigade have accepted with great energy. The second is to consider the trade-off between concision and dispersion in a program's understandability.

Until we reach the purest sense of declarative programming, all programs will have patterns. These patterns are the recurring structures that programmers build within their chosen style and language to implement behaviors not directly supported by the language. The patterns literature describes what to build, in a given set of circumstances, along with some idea of how to build the what in a way that makes the most of the circumstances.

I will be studying "On the Expressive Power of Programming Languages" again in the next few months. I think it has a lot more to teach me.

February 04, 2007 10:54 PM

2006 Running in Review, A Little Tardy

February 2007 is already here, and I still haven't written up my "running year in review" post, as I did for both 2005 and 2004. This lack of urgency derives in part from a lack of excitement about what I accomplished in 2006, but mostly reflects busyness at work and a persistent low-grade illness of some sort that has kept me off the road for most of 2007 thus far and so has me thinking less about running than I might otherwise.

After a couple of years of big increases, last year I saw a decrease in mileage for the year, though not that much of a decline:

- 2006: 1932.9 miles

- 2005: 2137.7 miles

- 2004: 1907.0 miles

- 2003: 1281.8 miles

Even though I had my second biggest year ever in terms of miles on the road, in most other ways it was a less satisfying year than in the past. I started the year well enough but then hit a tough stretch in March and April when my hamstrings and feet caused me grief. I had never dealt with running injuries, whether momentary or chronic, before. This pain was chronic, and only rest can do much for it.

This is almost my first year ever when I never PRed a single distance -- not 5K, half marathon, or marathon. I didn't race a single 5K this year, which is a shame, because my summer speed training probably had me in the right shape to do well. My spring of soreness affected my training for the Sturgis Falls half marathon in June. While I missed a PR by a good 4 minutes, I still managed to run my second best time ever at that distance. I felt pretty good going into my marathon training plan, and it went well. But there is no need to recount my experience at the Twin Cities Marathon, which resulted in my worst time ever. I was lucky, perhaps foolhardy, to have finished the race at all.

The year ended on a bit of a down note, as I never quite seemed to recover from the marathon, as much mentally as physically. My post-marathon mileage was okay, though -- just no "zip".

As I mentioned earlier, 2007 has started slowly. I've been under the weather since January 5(!), and the last two weeks have been the worst of all. The silver lining in not running much these last many weeks may well be that my body and mind have had a chance to rest that I never would have given them otherwise. I think I'll take this opportunity to start again from scratch. Maybe I'll get my "zip" back, both in speed and mindset.

(Another silver lining may be the natural excuse it gives me for not running during our recent cold snap. Last night, we reached -15 degrees F., and tonight we'll get down to -17. The highs aren't making it to 0 degrees, either. Truth be told, though, I like being able to say that I've run in such conditions!)

February 02, 2007 6:13 PM

Recursing into the Weekend

Between meetings today, I was able to sneak in some reading. A number of science bloggers have begun to write a series of "basic concepts" entries, and one of the math bloggers wrote a piece on the basics of recursion and induction. This is, of course, a topic well on my mind this semester as I teach functional programming in my Programming Languages course. Indeed, I just gave my first lecture on data-driven recursion in class last Thursday, after having given an introduction to induction on Tuesday. I don't spend a lot of time on the mathematical sort of recursion in this course because it's not all that relevant to the processing of computer programs. (Besides, it's not nearly as fun!)

This would probably make a great "basic concepts in CS" post sometime, but I don't have time to write it today. But if you are interested, you can browse my lecture notes from the first day of recursive programming techniques in class.

(And, yes, Schemers among you, I know that my placement of parentheses in some of my procedures is non-standard. I do that in this first session or so so that students can see the if-expression that mimics our data type stand out. I promise not to warp their Scheme style with this convention much longer.)

Posted by Eugene Wallingford | Permalink | Categories: Computing, Software Development, Teaching and Learning

February 02, 2007 5:57 PM

Week of Science Challenge, Computer Science-Style

I don't consider myself a "science blogger", because to me computer science is much more than just science. But I do think of Knowing and Doing as a place for me to write about the intellectual elements of computer science and software development, and there is a wonderful, intriguing, important scientific discipline at the core of all that we do. So when I ran across the Week of Science Challenge in a variety of places, I considered playing along. Doing so would mean postponing a couple of topics I've been thinking about writing about, and it would mean not writing about the teaching side of what I do for the next few days. Sometimes, when I come out of class, that is all I want to do! But taking the challenge might also serve as a good way to motivate myself to write on some "real" computer science issues for a while again. And that would force me to read and think about this part of my world a bit more. Given the hectic schedule I face on and off campus in the next four weeks, that would be a refreshing intellectual digression -- and a challenge to my time management skills.

I have decided to pick up the gauntlet and take the challenge. I don't think I can promise a post every day during February 5-11, or that my posts will be considered "science blogs" by all of the natural scientists who consider computer science and software development as technology or engineering disciplines. But let's see where the challenge leads. Watch for some real CS here in the next week...